```r
library(wooldridge)  # provides the gpa1 data set

# gpa_model <- lm(colGPA ~ hsGPA, data = gpa1)
# summary(gpa_model)
```

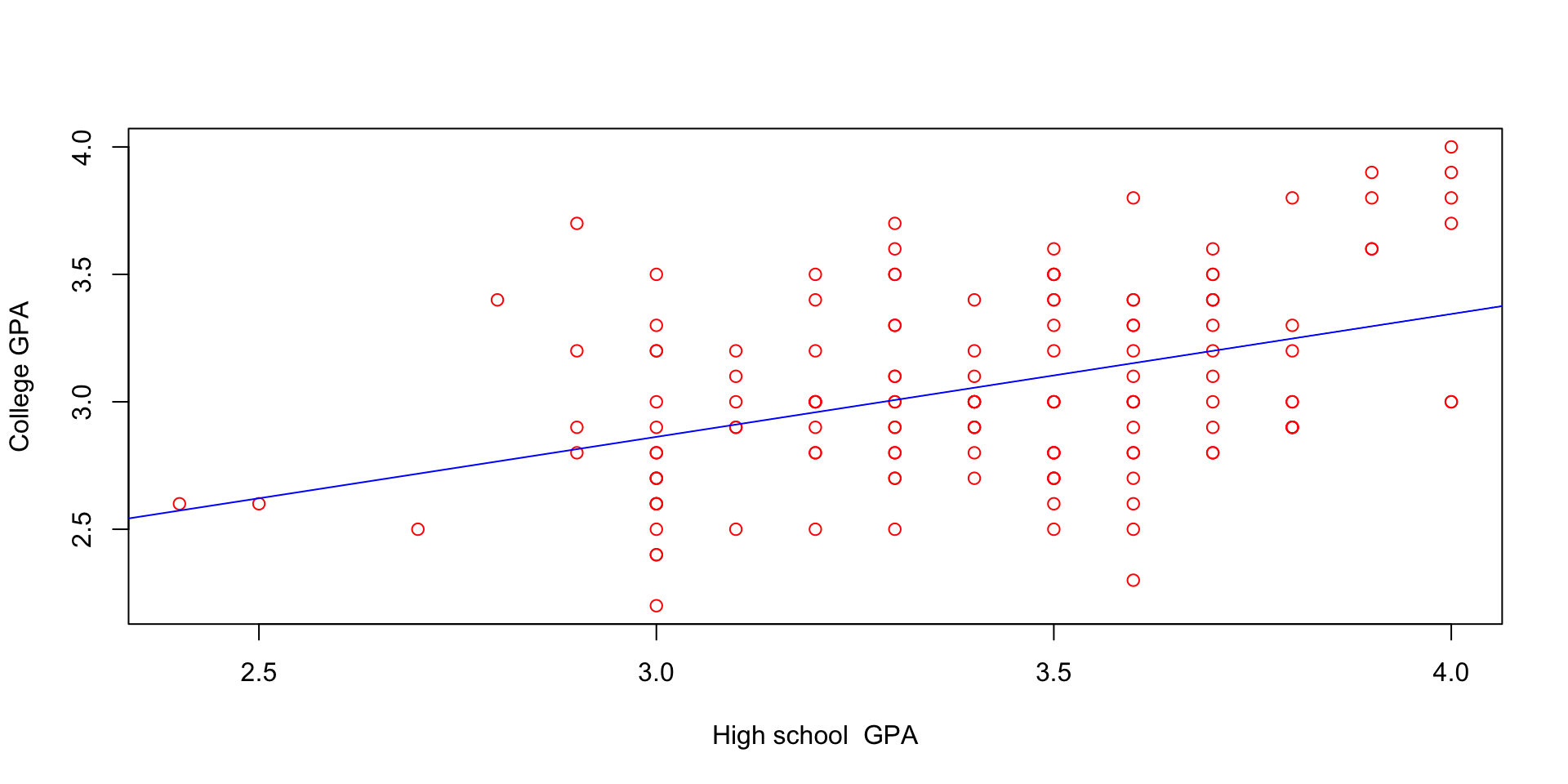

```r
# Scatter plot of college GPA against high school GPA, with the fitted OLS line
plot(gpa1$hsGPA, gpa1$colGPA,
     xlab = "High school GPA",
     ylab = "College GPA",
     col = "red")
abline(lm(colGPA ~ hsGPA, data = gpa1), col = "blue")
```

Topic 3: Simple Linear Regression

The simple linear regression model (SLRM) studies how \(y\) changes as \(x\) changes.

Population Model: \[y_i = \beta_0 + \beta_1 x_i + u_i\]

Estimation from a sample gives: \[\hat{y_i} = \widehat{\beta_0} + \widehat{\beta_1} x_i\]


Note

1. Zero Mean: \(E(u) = 0\)

Why This Works

2. Mean Independence: \(E(u|x) = E(u)\)
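A zero overall mean does not imply mean independence. Here is a minimal simulation sketch; the design \(u = x^2 - 1\) is an assumption chosen for illustration. Both errors below have a mean close to zero, but only the first has the same mean in every range of \(x\).

```r
set.seed(1)
n <- 1e5
x <- rnorm(n)

# Mean independent: E(u | x) = 0 for every x
u_indep <- rnorm(n)
# Zero mean overall (E(x^2) = 1 for a standard normal), but E(u | x) = x^2 - 1 depends on x
u_dep <- x^2 - 1 + rnorm(n, sd = 0.1)

# Average each error within four ranges of x
bins <- cut(x, breaks = c(-Inf, -1, 0, 1, Inf))
cond_means <- data.frame(
  u_indep = as.vector(tapply(u_indep, bins, mean)),
  u_dep   = as.vector(tapply(u_dep, bins, mean)),
  row.names = levels(bins)
)

print(round(c(mean_u_indep = mean(u_indep), mean_u_dep = mean(u_dep)), 3))
print(round(cond_means, 3))
```

The conditional means of u_dep are large and positive in the outer bins and negative in the middle bins, so \(E(u \mid x) \neq E(u)\) even though \(E(u) = 0\).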

Implications

Original Model

\(wage_i = \beta_0 + \beta_1educ_i + u_i\)

Where \(u\) includes unobserved ability; suppose, for example, that \(E(u) = 100\).

Rewritten Model

\(wage_i = (\beta_0 + 100) + \beta_1educ_i + \tilde{u_i}\)

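Where \(\tilde{u}_i = u_i - 100\), so \(E(\tilde{u}_i) = 0\) when \(E(u_i) = 100\). Because the model includes an intercept, any nonzero mean of the error can be absorbed into \(\beta_0\), so assuming \(E(u) = 0\) costs nothing.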

\[ wage_i = \beta_0 + \beta_1 educ_i + u_i \]

Zero conditional mean implies:

\(E(y_i|x_i) = \beta_0 + \beta_1x_i\)

Interpretation

gpa1 data

\(E(colGPA|hsGPA) = 1.5 + 0.5 \, hsGPA\)

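For \(hsGPA = 3.6\): \(E(colGPA \mid hsGPA = 3.6) = 1.5 + 0.5 \times 3.6 = 3.3\)

Systematic part: \(E(y \mid x) = \beta_0 + \beta_1 x\), the part of \(y\) explained by \(x\)

Unsystematic part: \(u = y - E(y \mid x)\), the part of \(y\) not explained by \(x\)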
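The conditional-mean arithmetic, and the split of individual outcomes into systematic and unsystematic parts, can be sketched in R. The error draws are hypothetical (an assumed normal error with standard deviation 0.3) and only illustrate that students with the same hsGPA scatter around 3.3.

```r
# Population regression function from the example: E(colGPA | hsGPA) = 1.5 + 0.5 * hsGPA
beta0 <- 1.5
beta1 <- 0.5
hsGPA <- 3.6

systematic <- beta0 + beta1 * hsGPA   # E(colGPA | hsGPA = 3.6)
print(systematic)

# Hypothetical unsystematic parts for five students with hsGPA = 3.6 (assumed sd = 0.3)
set.seed(42)
u <- rnorm(5, mean = 0, sd = 0.3)
colGPA <- systematic + u              # y = systematic part + unsystematic part
print(round(colGPA, 2))
```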

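```r
set.seed(123)
n <- 1e5

# u1: pure noise, unrelated to x, so E(u1 | x) = E(u1) = 0
u1 <- rnorm(n, mean = 0.0, sd = 0.1)

# u2: depends on x, so E(u2 | x) = x varies with x,
# even though its overall mean is close to zero
x <- sin(seq(-5, 5, length.out = n))
u2 <- x + rnorm(n, mean = 0, sd = 0.1)

cat("Mean of u1:", mean(u1), "\n", "Mean of u2:", mean(u2), "\n")
```

```
Mean of u1: 9.767488e-05
 Mean of u2: 0.0005215476
```

We start with two key assumptions, both implied by the zero conditional mean assumption \(E(u \mid x) = 0\):

\[E(u) = 0\]

\[E(xu) = 0\]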

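Using the population model, \[y_i = \beta_0 + \beta_1 x_i + u_i,\] we can rewrite these as conditions on observable variables and the parameters:

\[E(y_i - \beta_0 - \beta_1 x_i) = 0\]

\[E[x_i(y_i - \beta_0 - \beta_1 x_i)] = 0\]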

Sample counterparts

\[\frac{1}{n} \sum_{i=1}^{n}(y_i - \hat{\beta_0} - \hat{\beta_1}x_i) = 0\]

\[\frac{1}{n} \sum_{i=1}^{n}x_i(y_i - \hat{\beta_0} - \hat{\beta_1}x_i) = 0\]

The first condition, \(\frac{1}{n} \sum_{i=1}^{n}(y_i - \hat{\beta_0} - \hat{\beta_1}x_i) = 0\), simplifies to

\[\bar{y} = \hat{\beta_0} + \hat{\beta_1}\bar{x}\]

where \(\bar{y} = \frac{1}{n} \sum_{i=1}^{n}y_i\) is the sample average of the \(y_i\) (and likewise \(\bar{x}\) for the \(x_i\)).

Thus,

\[\hat{\beta_0} = \bar{y} - \hat{\beta_1}\bar{x}\]

Plugging \(\hat{\beta_0}\) into the second condition, \(\frac{1}{n} \sum_{i=1}^{n}x_i(y_i - \hat{\beta_0} - \hat{\beta_1}x_i) = 0\), and simplifying gives the slope coefficient \(\hat{\beta_1}\):

\[\hat{\beta_1} = \frac{\sum_{i=1}^{n}(x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n}(x_i - \bar{x})^2}\]

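provided \(\sum_{i=1}^{n}(x_i - \bar{x})^2 > 0\). What does this mean? The \(x_i\) in the sample must not all take the same value; otherwise the denominator is zero and the slope is undefined.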
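The simplification step, written out. Substitute \(\hat{\beta_0} = \bar{y} - \hat{\beta_1}\bar{x}\) into the second condition and multiply through by \(n\):

\[
\begin{aligned}
\sum_{i=1}^{n} x_i\left(y_i - \bar{y} - \hat{\beta_1}(x_i - \bar{x})\right) &= 0 \\
\sum_{i=1}^{n} x_i (y_i - \bar{y}) &= \hat{\beta_1} \sum_{i=1}^{n} x_i (x_i - \bar{x}) \\
\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y}) &= \hat{\beta_1} \sum_{i=1}^{n} (x_i - \bar{x})^2
\end{aligned}
\]

The last line uses \(\sum_{i=1}^{n} \bar{x}(y_i - \bar{y}) = 0\) and \(\sum_{i=1}^{n} \bar{x}(x_i - \bar{x}) = 0\). Dividing both sides by \(\sum_{i=1}^{n}(x_i - \bar{x})^2\) gives \(\hat{\beta_1}\), which is why that sum must be positive.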

Note that \(\hat{\beta_1}\) is nothing but the sample covariance between \(x\) and \(y\) divided by the sample variance of \(x\), which can be written as

\[\hat{\beta}_1=\hat{\rho}_{x y} \cdot\left(\frac{\hat{\sigma_y}}{\hat{\sigma_x}}\right)\]

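since \(\hat{\rho}_{xy} = \hat{\sigma}_{xy} / (\hat{\sigma}_x \hat{\sigma}_y)\), where \(\hat{\sigma}_{xy}\) is the sample covariance and \(\hat{\sigma}_x\), \(\hat{\sigma}_y\) are the sample standard deviations.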
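A quick numerical check of these equivalent forms of the slope, using R's built-in cars data (stopping distance dist regressed on speed) as an assumed example. The \(n-1\) divisors in cov(), var(), and sd() cancel, so each ratio equals the OLS slope.

```r
x <- cars$speed
y <- cars$dist

b1_sums <- sum((x - mean(x)) * (y - mean(y))) / sum((x - mean(x))^2)
b1_cov  <- cov(x, y) / var(x)             # sample covariance / sample variance
b1_cor  <- cor(x, y) * sd(y) / sd(x)      # correlation times ratio of standard deviations
b0      <- mean(y) - b1_sums * mean(x)    # intercept from ybar = b0 + b1 * xbar

fit <- lm(dist ~ speed, data = cars)
print(c(b1_sums = b1_sums, b1_cov = b1_cov, b1_cor = b1_cor))
print(c(b0 = b0, b1 = b1_sums))
print(coef(fit))
```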

The same estimates also solve a minimization problem. The line (characterized by an intercept and a slope) that minimizes \[\sum_{i=1}^n \hat{u}_i^2= \underbrace{\sum_{i=1}^n\left(y_i-\hat{\beta}_0-\hat{\beta}_1 x_i\right)^2}_{\text{SSR: sum of squared residuals}}\]

leads to first-order conditions (FOCs) that are the same as the sample counterparts of the moment conditions we used to derive the OLS estimates.

OLS chooses \(\hat{\beta_0}\) and \(\hat{\beta_1}\) to minimize SSR.
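That OLS minimizes SSR can be checked numerically. This sketch again assumes the built-in cars data. It evaluates SSR at the lm() estimates and at nearby points, and it runs base R's optim() with an analytic gradient to show that a general-purpose minimizer lands on essentially the same intercept and slope.

```r
x <- cars$speed
y <- cars$dist

# Sum of squared residuals for a candidate intercept b[1] and slope b[2]
ssr <- function(b) sum((y - b[1] - b[2] * x)^2)
# Gradient of SSR; setting it to zero gives the two FOCs
ssr_grad <- function(b) {
  r <- y - b[1] - b[2] * x
  c(-2 * sum(r), -2 * sum(x * r))
}

b_ols <- unname(coef(lm(dist ~ speed, data = cars)))
ssr_ols <- ssr(b_ols)

# Moving away from the OLS estimates in any of these directions increases SSR
steps <- list(c(1, 0), c(-1, 0), c(0, 0.1), c(0, -0.1), c(1, -0.1))
ssr_moved <- sapply(steps, function(s) ssr(b_ols + s))

# Numerical minimization starting from (0, 0)
opt <- optim(c(0, 0), ssr, ssr_grad, method = "BFGS",
             control = list(reltol = 1e-12, maxit = 1000))

print(ssr_ols)
print(ssr_moved)
print(rbind(ols = b_ols, optim = opt$par))
print(ssr_grad(b_ols))   # the FOCs hold at the OLS estimates: gradient is ~0
```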

Let us implement the OLS formulas in R and test them against R's built-in lm() function.

Try testing these out with this generated data:
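Here is a minimal sketch of this exercise. The helper ols_slr() is hypothetical (written for illustration, not part of any package), and the data are simulated under assumed true values \(\beta_0 = 1\) and \(\beta_1 = 2\).

```r
# Hypothetical helper: OLS intercept and slope from the closed-form formulas
ols_slr <- function(x, y) {
  sst_x <- sum((x - mean(x))^2)
  if (sst_x == 0) stop("x has no sample variation; the slope is not defined")
  b1 <- sum((x - mean(x)) * (y - mean(y))) / sst_x
  b0 <- mean(y) - b1 * mean(x)
  c(intercept = b0, slope = b1)
}

# Simulated data with assumed true beta0 = 1 and beta1 = 2
set.seed(2024)
n <- 500
x <- runif(n, 0, 10)
y <- 1 + 2 * x + rnorm(n, sd = 2)

print(ols_slr(x, y))
print(coef(lm(y ~ x)))   # should agree with ols_slr() up to rounding
```

The explicit check on sst_x mirrors the requirement that \(\sum_{i}(x_i - \bar{x})^2 > 0\).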

Total Sum of Squares (SST): \[ \text{SST} \equiv \sum_{i=1}^{n}(y_i - \bar{y})^2 \]

Explained Sum of Squares (SSE): \[ \text{SSE} \equiv \sum_{i=1}^{n}(\hat{y}_i - \bar{y})^2 \]

Residual Sum of Squares (SSR): \[ \text{SSR} \equiv \sum_{i=1}^{n}\hat{u}_i^2 \]

Relationship Between Sums of Squares

Total variation in \(y\) can be expressed as:

\[ \text{SST} = \text{SSE} + \text{SSR} \]

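Proof outline. To prove this relationship, write:

\[ \sum_{i=1}^{n}(y_i - \bar{y})^2 = \sum_{i=1}^{n}[(y_i - \hat{y}_i) + (\hat{y}_i - \bar{y})]^2 \] \[ = \sum_{i=1}^{n}[\hat{u}_i + (\hat{y}_i - \bar{y})]^2 \] \[ = \text{SSR} + 2\sum_{i=1}^{n}\hat{u}_i(\hat{y}_i - \bar{y}) + \text{SSE} \]

The cross term is zero: \(\hat{y}_i - \bar{y} = \hat{\beta}_1(x_i - \bar{x})\), and the OLS first-order conditions give \(\sum_{i=1}^{n}\hat{u}_i = 0\) and \(\sum_{i=1}^{n}x_i\hat{u}_i = 0\). Hence SST = SSE + SSR.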
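The identity, the vanishing cross term, and \(R^2 = \text{SSE}/\text{SST}\) can be checked numerically. This sketch assumes the built-in cars data (dist on speed), which appears to be the regression behind the \(R^2\) value and ANOVA table that follow.

```r
fit <- lm(dist ~ speed, data = cars)
y <- cars$dist
y_hat <- fitted(fit)
u_hat <- resid(fit)

sst <- sum((y - mean(y))^2)
sse <- sum((y_hat - mean(y))^2)
ssr <- sum(u_hat^2)
cross <- sum(u_hat * (y_hat - mean(y)))   # zero up to rounding error

print(c(SST = sst, SSE = sse, SSR = ssr, cross_term = cross))
print(sse / sst)                          # R-squared
print(summary(fit)$r.squared)
anova(fit)   # Sum Sq column: SSE in the speed row, SSR in the Residuals row
```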

```
[1] 0.6510794

Analysis of Variance Table

Response: dist
          Df Sum Sq Mean Sq F value   Pr(>F)
speed      1  21186 21185.5  89.567 1.49e-12 ***
Residuals 48  11354   236.5
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

An estimator is unbiased if, on average, it produces estimates that are equal to the true value of the parameter being estimated.

Definition

An estimator producing an estimate \(\hat{\theta}\) for a parameter \(\theta\) is considered unbiased if: \[ \mathbb{E}(\widehat{\theta}) = \theta \]

That is, the sampling distribution of \(\hat{\theta}\) is centered at the true \(\theta\).

We will now show that the OLS estimator for a SLR model’s parameters \((\beta_0, \beta_1)\) is unbiased

SLR.1: Linear in parameters

In the population model, the dependent variable, \(y\), is related to the independent variable, \(x\), and the error (or disturbance), \(u\), as: \[ y=\beta_0+\beta_1 x+u \] where \(\beta_0\) and \(\beta_1\) are the population intercept and slope parameters, respectively.

SLR.2: Random sampling

We have a random sample of size \(n\), \(\left\{\left(x_i, y_i\right): i=1,2, \ldots, n\right\}\), following the population model.

SLR.3: Sample variation in \(x\)

The sample outcomes on the explanatory variable, \(x_i\), \(i \in \{1, \ldots, n\}\), are not all the same value: \[\widehat{Var}(x_i) > 0\]
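What goes wrong when SLR.3 fails can be seen with a tiny made-up example in which every \(x_i\) equals 5. Here \(\sum_i (x_i - \bar{x})^2 = 0\), the slope formula becomes 0/0, and lm() reports the slope as NA because the regressor is perfectly collinear with the intercept.

```r
x <- rep(5, 10)        # no sample variation in x
y <- 1:10

sst_x <- sum((x - mean(x))^2)
print(sst_x)
print(sum((x - mean(x)) * (y - mean(y))) / sst_x)   # 0 / 0 gives NaN in R

fit <- lm(y ~ x)
print(coef(fit))       # slope is NA: x is aliased with the intercept
```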

SLR.4: Zero conditional mean

The error \(u\) has an expected value of zero given any value of the explanatory variable. In other words, \[E(u \mid x)=0\]

Theorem: Unbiasedness of the OLS estimator

Under Assumptions SLR.1 through SLR.4,

\[ \mathrm{E}\left(\hat{\beta}_0\right)=\beta_0 \text { and } \mathrm{E}\left(\hat{\beta}_1\right)=\beta_1 \]
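Unbiasedness is a statement about averages over repeated samples, so a Monte Carlo sketch can illustrate it. The true parameters, error distribution, and sample size below are assumptions. The \(x_i\) are drawn once and held fixed, matching the conditioning on the sample values of \(x\).

```r
set.seed(10)
beta0 <- 1
beta1 <- 0.5                 # assumed true parameters
n <- 50
x <- runif(n, 0, 10)         # drawn once and held fixed: we condition on x
reps <- 2000

estimates <- t(replicate(reps, {
  u <- rnorm(n, mean = 0, sd = 2)   # E(u | x) = 0 holds by construction
  y <- beta0 + beta1 * x + u
  coef(lm(y ~ x))
}))

# Averages over many samples should be close to (beta0, beta1)
print(colMeans(estimates))
```

Any single estimate can be far from the truth; the theorem only says the estimates are centered on it.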

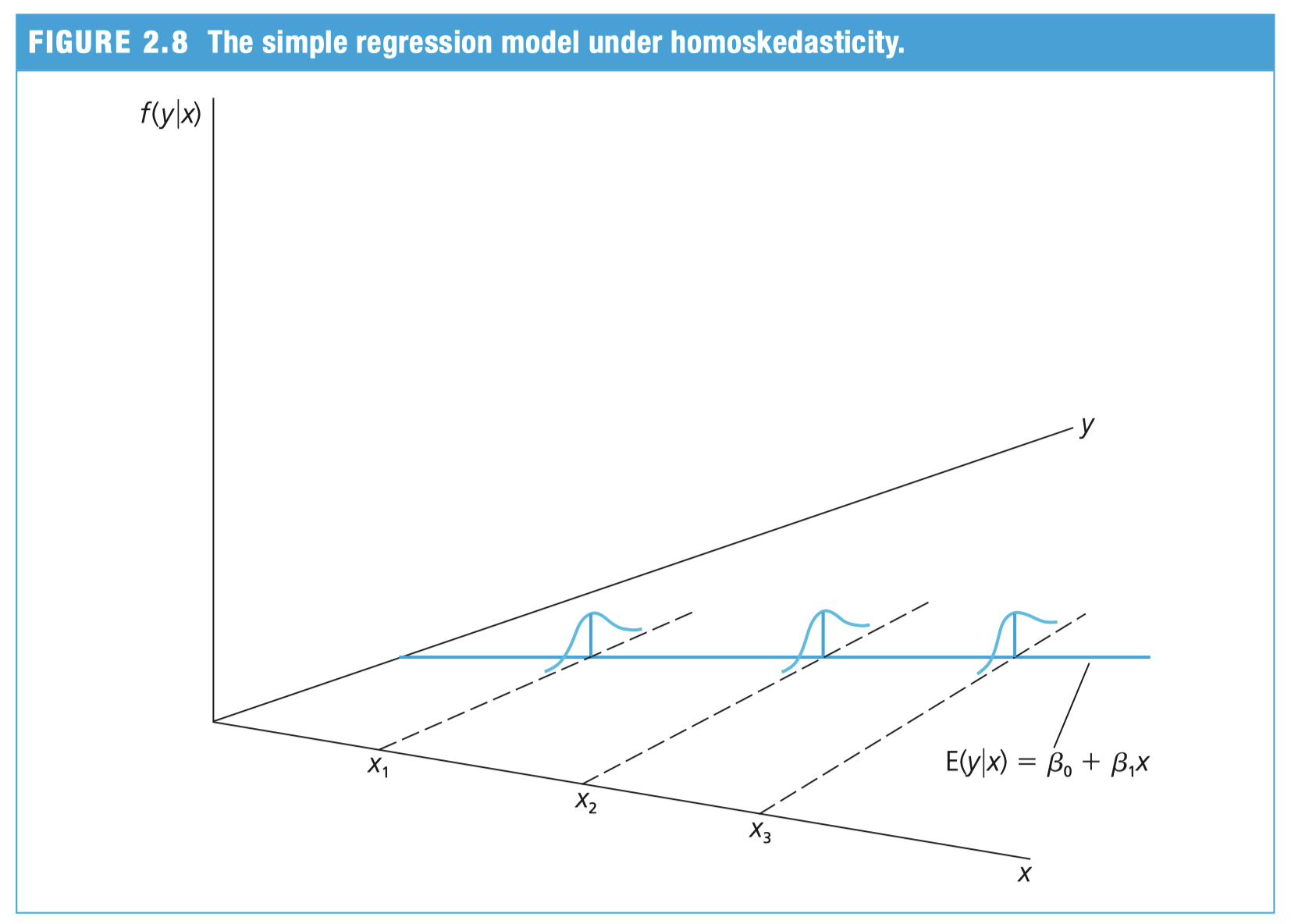

SLR.5: Homoskedasticity

The population error \(u_i\) has the same variance given any value of \(x_i\), i.e.,

\[ Var(u \mid x) = \sigma^2 \]

Together with SLR.4, SLR.5 implies that \(\sigma^2\) is also the unconditional variance of \(u\):

\[\operatorname{Var}(u \mid x)=\mathrm{E}\left(u^2 \mid x\right)-[\mathrm{E}(u \mid x)]^2\]

\[ \text { and since } \mathrm{E}(u \mid x)=0, \sigma^2=\mathrm{E}\left(u^2 \mid x\right)\]

so \(\sigma^2 = \mathrm{E}(u^2) = \operatorname{Var}(u)\), using \(\mathrm{E}(u) = 0\).

Using Assumptions SLR.4 and SLR.5, we can derive the conditional mean and variance of \(y\):

\[ \begin{gathered} \mathrm{E}(y \mid x)=\beta_0+\beta_1 x \\ \operatorname{Var}(y \mid x)=\sigma^2 \end{gathered} \]

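The variance result holds because, conditional on \(x\), \(\beta_0 + \beta_1 x\) is a constant, so \(\operatorname{Var}(y \mid x) = \operatorname{Var}(u \mid x) = \sigma^2\).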
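A simulation sketch can contrast an error that satisfies SLR.5 with one that does not. Both designs are assumptions for illustration. Under homoskedasticity the spread of \(y\) around the regression line is the same across ranges of \(x\); under heteroskedasticity it grows with \(x\).

```r
set.seed(3)
n <- 1e5
x <- runif(n, 0, 10)
u_homo   <- rnorm(n, sd = 2)               # Var(u | x) = 4 for every x (SLR.5 holds)
u_hetero <- rnorm(n, sd = 0.5 + 0.3 * x)   # Var(u | x) grows with x (SLR.5 fails)
y_homo   <- 1 + 0.5 * x + u_homo
y_hetero <- 1 + 0.5 * x + u_hetero

# Approximate Var(y | x) = Var(u | x) by the variance of OLS residuals within ranges of x
bins <- cut(x, breaks = c(0, 2.5, 5, 7.5, 10), include.lowest = TRUE)
var_by_bin <- data.frame(
  homo   = as.vector(tapply(resid(lm(y_homo ~ x)), bins, var)),
  hetero = as.vector(tapply(resid(lm(y_hetero ~ x)), bins, var)),
  row.names = levels(bins)
)
print(round(var_by_bin, 2))
```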

Sampling variance of OLS estimators

Under Assumptions SLR.1 through SLR.5,

\[ \operatorname{Var}\left(\hat{\beta}_1\right)=\frac{\sigma^2}{\sum_{i=1}^n\left(x_i-\bar{x}\right)^2} = \frac{\sigma^2}{\mathrm{SST}_x}, \]

and

\[ \operatorname{Var}\left(\hat{\beta}_0\right)=\frac{\sigma^2 \frac{1}{n} \sum_{i=1}^n x_i^2}{\sum_{i=1}^n\left(x_i-\bar{x}\right)^2} = \frac{\sigma^2 \frac{1}{n} \sum_{i=1}^n x_i^2}{\mathrm{SST}_x} \]

where these are conditional on the sample values \(\left\{x_1, \ldots, x_n\right\}\).

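Using \(\hat{\beta}_1 = \beta_1 + \frac{\sum_{i=1}^{n} d_iu_i}{SST_x}\) with \(d_i = x_i - \bar{x}\):

\[\begin{align*} \operatorname{Var}\left(\hat{\beta}_1\right) & = \operatorname{Var}\left(\beta_1 + \frac{\sum_{i=1}^{n} d_iu_i}{SST_x}\right) \\ & = \operatorname{Var}\left(\frac{\sum_{i=1}^{n} d_iu_i}{SST_x}\right) \quad \text{since } \beta_1 \text{ is a constant} \\ & = \frac{1}{\left(SST_x\right)^2} \sum_{i=1}^{n} d_i^2 \operatorname{Var}(u_i) \quad \text{since } d_i,\ SST_x \text{ are fixed given } x \text{ and the } u_i \text{ are independent (SLR.2)} \\ & = \frac{\sigma^2}{SST_x} \quad \text{since } \operatorname{Var}(u_i) = \sigma^2 \text{ (SLR.5) and } \sum_{i=1}^{n} d_i^2 = SST_x \end{align*}\]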
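The formula \(\operatorname{Var}(\hat{\beta}_1) = \sigma^2/\mathrm{SST}_x\) can be checked by simulation, holding \(d_i = x_i - \bar{x}\) fixed across samples. The true parameters and \(\sigma\) below are assumptions.

```r
set.seed(11)
n <- 40
sigma <- 1.5                       # assumed error standard deviation
x <- rnorm(n, mean = 5, sd = 2)    # fixed design: we condition on x
d <- x - mean(x)
sst_x <- sum(d^2)

# Draw many samples of y given the same x and record the OLS slope each time
b1_draws <- replicate(20000, {
  y <- 2 + 0.7 * x + rnorm(n, sd = sigma)
  sum(d * (y - mean(y))) / sst_x
})

print(c(simulated = var(b1_draws), formula = sigma^2 / sst_x))
```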

Unbiased estimation of \(\sigma^2\)

Under Assumptions SLR.1 through SLR.5,

\[\mathrm{E}\left(\hat{\sigma}^2\right)=\sigma^2\]

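where \(\hat{\sigma}^2=\frac{1}{n-2} \sum_{i=1}^n \hat{u}_i^2\) and \(\hat{u}_i=y_i-\hat{\beta}_0-\hat{\beta}_1 x_i\). Dividing by \(n-2\) rather than \(n\) accounts for the two restrictions (the two first-order conditions) that the OLS residuals satisfy.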
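A sketch computing \(\hat{\sigma}^2\) by hand for the built-in cars regression (an assumed example) and checking it against what summary() reports. Plugging \(\hat{\sigma}^2\) into \(\sigma^2/\mathrm{SST}_x\) reproduces the usual standard error of the slope.

```r
fit <- lm(dist ~ speed, data = cars)
n <- nrow(cars)

sigma2_hat <- sum(resid(fit)^2) / (n - 2)   # SSR / (n - 2)
print(sigma2_hat)
print(summary(fit)$sigma^2)                 # summary() reports sigma_hat, its square root

# Plug sigma2_hat into Var(b1_hat) = sigma^2 / SST_x to get the usual standard error
sst_x <- sum((cars$speed - mean(cars$speed))^2)
se_b1 <- sqrt(sigma2_hat / sst_x)
print(c(manual = se_b1, lm = coef(summary(fit))["speed", "Std. Error"]))
```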

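Assuming SLR.1 through SLR.5, and conditional on the sample values \(\left\{x_1, \ldots, x_n\right\}\), we have shown that the OLS estimators are unbiased, that \(\operatorname{Var}(\hat{\beta}_1) = \sigma^2/\mathrm{SST}_x\) and \(\operatorname{Var}(\hat{\beta}_0) = \sigma^2 n^{-1}\sum_{i=1}^n x_i^2/\mathrm{SST}_x\), and that \(\hat{\sigma}^2\) is an unbiased estimator of \(\sigma^2\).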

Warning

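Heteroskedasticity (a failure of SLR.5) is common in cross-sectional data (wages, firm sizes, housing prices). When it is present, the variance formulas above, and the usual standard errors based on them, are no longer valid.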

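```r
library(lmtest)    # bptest(), coeftest()
library(sandwich)  # vcovHC() for heteroskedasticity-robust standard errors

# Generate data with heteroskedasticity: the error sd grows with educ
set.seed(456)
n <- 1000
educ  <- rnorm(n, 12, 3)
exper <- runif(n, 0, 20)   # not used in the model below
u     <- rnorm(n, 0, sd = 0.5 + 3 * educ^2)
wage  <- 10 + 2 * educ + u

model <- lm(wage ~ educ)

# Breusch-Pagan test for heteroskedasticity (H0: homoskedastic errors)
bptest(model)
```

```
	studentized Breusch-Pagan test

data:  model
BP = 184.4, df = 1, p-value < 2.2e-16
```

The test strongly rejects homoskedasticity. To get heteroskedasticity-robust standard errors after fitting the model with lm(), use: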

```r
library(sandwich)
library(lmtest)

model <- lm(wage ~ educ)

# Coefficient t tests using HC1 heteroskedasticity-robust standard errors
coeftest(model, vcov = vcovHC(model, type = "HC1"))
```

```
t test of coefficients:

            Estimate Std. Error t value Pr(>|t|)
(Intercept)   9.4847    76.1247  0.1246   0.9009
educ          2.3196     7.1087  0.3263   0.7443
```
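What the robust standard errors compute can be sketched by hand in base R. The sketch builds the heteroskedasticity-robust (HC0) variance matrix \((X'X)^{-1}\left(\sum_i \hat{u}_i^2 x_i x_i'\right)(X'X)^{-1}\) and applies the \(n/(n-k)\) scaling that, per the sandwich documentation, defines HC1. It also checks the slope entry against the simple-regression form \(\sum_i (x_i - \bar{x})^2 \hat{u}_i^2 / \mathrm{SST}_x^2\). The data-generating process is an assumption, chosen with a smaller error variance than above.

```r
set.seed(456)
n <- 500
x <- rnorm(n, 12, 3)
y <- 10 + 2 * x + rnorm(n, sd = 0.5 + 0.3 * abs(x))   # error sd grows with |x|

fit <- lm(y ~ x)
X <- model.matrix(fit)            # n x 2 matrix: intercept column and x
u_hat <- resid(fit)
k <- ncol(X)

XtX_inv <- solve(crossprod(X))    # (X'X)^{-1}
meat <- crossprod(X * u_hat)      # sum over i of u_hat_i^2 * x_i x_i'
vcov_hc0 <- XtX_inv %*% meat %*% XtX_inv
vcov_hc1 <- vcov_hc0 * n / (n - k)   # degrees-of-freedom scaling

# Slope entry agrees with the simple-regression formula
d <- x - mean(x)
var_b1_hc0 <- sum(d^2 * u_hat^2) / sum(d^2)^2

print(c(matrix_hc0 = vcov_hc0[2, 2], formula_hc0 = var_b1_hc0))
print(sqrt(diag(vcov_hc1)))       # HC1 robust standard errors
print(sqrt(diag(vcov(fit))))      # usual (non-robust) standard errors
```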

For comparison, summary(model) reports the usual (non-robust) standard errors:

```
Call:
lm(formula = wage ~ educ)

Residuals:
     Min       1Q   Median       3Q      Max
-2058.77  -251.34    -4.47   264.48  2258.63

Coefficients:
            Estimate Std. Error t value Pr(>|t|)
(Intercept)    9.485     69.421   0.137    0.891
educ           2.320      5.545   0.418    0.676

Residual standard error: 515.7 on 998 degrees of freedom
Multiple R-squared:  0.0001753,  Adjusted R-squared:  -0.0008265
F-statistic: 0.175 on 1 and 998 DF,  p-value: 0.6758
```

| Specification | Change in x | Effect on y |
|---|---|---|
| Level-level | +1 unit | +\(b_1\) units |
| Level-log | +1% | ≈ +\(\frac{b_1}{100}\) units |
| Log-level | +1 unit | ≈ +\((100 \times b_1)\%\) |
| Log-log | +1% | ≈ +\(b_1\%\) |

Rows marked ≈ are approximations that are accurate for small changes.
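The log rules are approximations that work best for small coefficients. This sketch uses a hypothetical log-level coefficient of 0.08 to compare the table's \(100 \times b_1\) rule with the exact change \(100(e^{b_1} - 1)\). It then recovers an assumed elasticity of 0.5 from simulated log-log data.

```r
# Log-level: hypothetical coefficient b1 = 0.08 from log(y) = b0 + b1 * x
b1 <- 0.08
pct_approx <- 100 * b1               # table rule: about 8% per unit of x
pct_exact  <- 100 * (exp(b1) - 1)    # exact percentage change implied by the log model
print(round(c(approx = pct_approx, exact = pct_exact), 2))

# Log-log: simulate a constant-elasticity model y = 3 * x^0.5 * exp(u) (assumed design)
set.seed(7)
x <- runif(1000, 1, 100)
y <- 3 * x^0.5 * exp(rnorm(1000, sd = 0.1))
elasticity <- unname(coef(lm(log(y) ~ log(x)))[2])
print(elasticity)                    # close to 0.5: a 1% rise in x raises y by about 0.5%
```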

Note

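"Linear" in linear regression means linear in the parameters, not necessarily in the variables: \(\log(y) = \beta_0 + \beta_1 \log(x) + u\) is still a linear regression model.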

Next class, we will start Chapter 3.