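library(wooldridge)  # provides the wage1 data set

wage_data <- wage1

# Regress log hourly wage on years of education, experience and tenure
reg1 <- lm(log(wage) ~ educ + exper + tenure, data = wage_data)

summary(reg1)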

Call:

lm(formula = log(wage) ~ educ + exper + tenure, data = wage_data)

Residuals:

Min 1Q Median 3Q Max

-2.05802 -0.29645 -0.03265 0.28788 1.42809

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.284360 0.104190 2.729 0.00656 **

educ 0.092029 0.007330 12.555 < 2e-16 ***

exper 0.004121 0.001723 2.391 0.01714 *

tenure 0.022067 0.003094 7.133 3.29e-12 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.4409 on 522 degrees of freedom

Multiple R-squared: 0.316, Adjusted R-squared: 0.3121

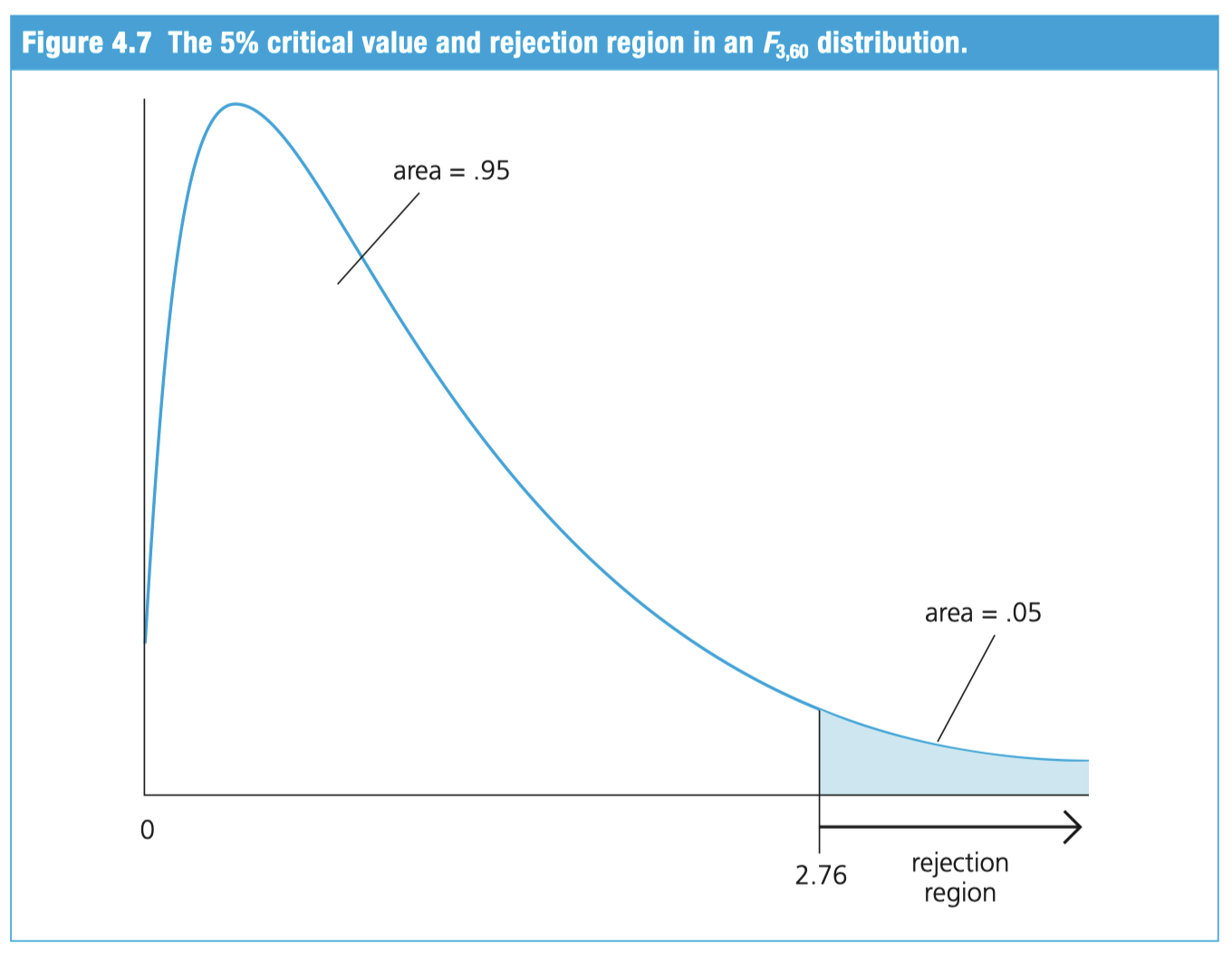

F-statistic: 80.39 on 3 and 522 DF, p-value: < 2.2e-16
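The t values, p-values, adjusted R-squared and F-statistic in this output are all functions of the estimates, standard errors, R-squared and residual degrees of freedom. As a sketch, the base-R snippet below recomputes them from the printed numbers, so it runs without the wooldridge package. Because those inputs are rounded, the results agree with the output only up to the last printed digit. It also converts each slope into the exact percentage effect implied by the log(wage) specification. The variable names (`est`, `se`, `t_val`, `pct_effect`, ...) are illustrative choices, not part of the original analysis.

```r
# Numbers copied from the summary(reg1) output above (rounded as printed)
est <- c(Intercept = 0.284360, educ = 0.092029, exper = 0.004121, tenure = 0.022067)
se  <- c(Intercept = 0.104190, educ = 0.007330, exper = 0.001723, tenure = 0.003094)
r2  <- 0.316             # Multiple R-squared
k   <- 3                 # number of regressors (educ, exper, tenure)
df_resid <- 522          # residual degrees of freedom, n - k - 1
n   <- df_resid + k + 1  # 526 observations

# t value: estimate divided by its standard error
t_val <- est / se

# Two-sided p-value from a t distribution with n - k - 1 degrees of freedom
p_val <- 2 * pt(abs(t_val), df = df_resid, lower.tail = FALSE)

# Adjusted R-squared penalizes R-squared for the number of regressors
adj_r2 <- 1 - (1 - r2) * (n - 1) / df_resid

# Overall F-statistic for H0: all three slope coefficients are zero
f_stat <- (r2 / k) / ((1 - r2) / df_resid)
f_pval <- pf(f_stat, df1 = k, df2 = df_resid, lower.tail = FALSE)

# Log-level model: 100 * (exp(b) - 1) is the exact percentage change in the
# predicted wage for a one-unit increase in a regressor, others held fixed.
# The intercept is not a slope, so it is dropped.
pct_effect <- 100 * (exp(est[-1]) - 1)

print(signif(cbind(t_val, p_val), 4))
print(round(c(adj_r2 = adj_r2, F = f_stat), 4))
print(f_pval)
print(round(pct_effect, 2))
```

With the fitted model in memory, `coef(summary(reg1))` returns the unrounded coefficient matrix. It can replace the hard-coded `est` and `se` vectors.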